Generator it is! Where to start?

So we decided to build a code generator (Q1 2020), and we want to generate serverless applications for the AWS platform. How do we do that? Well, first we have to determine how to generate code, and templating springs to mind. Although we only recently migrated our runtime code from Node.js to Python, our prototypes for the generator have always been developed in Python.

Workload characteristics

Generating code has a batch-like character to it: you have a predefined set of work, which is your model. At some point, when you want to build your project, you extract your model, transform it into runnable code, and load it into your repository (ETL).
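To make the ETL analogy concrete, here is a minimal sketch of such a batch run using only the standard library. The model format, the `orders` service, and the function names are made up for illustration; they are not our actual model.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical model: the "predefined set of work" for one build.
MODEL_JSON = '{"service": "orders", "functions": ["create_order", "cancel_order"]}'


def extract(raw: str) -> dict:
    """Extract: read the model (here simply a JSON string)."""
    return json.loads(raw)


def transform(model: dict) -> dict:
    """Transform: turn the model into source files, keyed by relative path."""
    files = {}
    for name in model["functions"]:
        path = f"{model['service']}/{name}.py"
        files[path] = (
            "def handler(event, context):\n"
            f"    return {{'function': '{name}'}}\n"
        )
    return files


def load(files: dict, repo_root: Path) -> list:
    """Load: write the generated files into the repository directory."""
    written = []
    for relative_path, source in files.items():
        target = repo_root / relative_path
        target.parent.mkdir(parents=True, exist_ok=True)
        target.write_text(source)
        written.append(target)
    return written


def run_batch(repo_root: Path) -> list:
    """One batch run: extract the model, transform it, load the result."""
    return load(transform(extract(MODEL_JSON)), repo_root)


if __name__ == "__main__":
    # A temporary directory stands in for the repository.
    with tempfile.TemporaryDirectory() as tmp:
        for path in run_batch(Path(tmp)):
            print(path.relative_to(tmp))
```

Each step is a plain function with one input and one output, which matches the procedural feel of the workload.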

It is not a streaming application with continuous loads; it even has a very procedural feel to it. It reminded me of the kind of automation I built during my master's thesis at the bioinformatics department of Radboud University, where we automated everything in Python. Why Python? Well, everybody was using it, so the availability of scientific packages and integrations with academic software is enormous. And when you start building software in Python you can see why the language is so popular: it is just comprehensive and you can learn the basics in one afternoon.

So Python stuck with me. When you have a clear goal in mind, it is best to stick with a language you are comfortable with, unless your goal is learning a new language or concept. For me, this means that the best fit for building a generator is either Python or Java in combination with Spring Boot. I love Spring Boot, but in my mental model it is a tool for interactive web applications or complex business applications with a diversity of workloads. So with my skill set, Python is the better fit for the task at hand: building a code generator.

We selected Jinja2 as our template engine. Its maturity is phenomenal and, as it turns out, generating code is quite easy. Figuring out what to generate, not so much.
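To give an idea of how little is needed on the templating side, here is a small sketch that renders Python handler functions from a model with Jinja2. The template and the model are invented for this example and are not taken from our generator; the descriptions are assumed not to contain quotes.

```python
from jinja2 import Environment

# Hypothetical template: one handler function per entry in the model.
TEMPLATE = '''\
{% for fn in functions %}
def {{ fn.name }}(event, context):
    """{{ fn.description }}"""
    return {"handled_by": "{{ fn.name }}"}


{% endfor %}
'''

# Hypothetical model describing what to generate.
MODEL = {
    "functions": [
        {"name": "create_order", "description": "Create a new order."},
        {"name": "cancel_order", "description": "Cancel an existing order."},
    ]
}


def render_handlers(model: dict) -> str:
    """Render the template with the model and return the generated source."""
    # trim_blocks drops the newline after a block tag, so the for/endfor
    # lines do not leave stray blank lines in the generated code.
    env = Environment(trim_blocks=True)
    return env.from_string(TEMPLATE).render(functions=model["functions"])


if __name__ == "__main__":
    print(render_handlers(MODEL))
```

The template is the easy part. Deciding what belongs in the model, and what the generated code should look like, is where the real work sits.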

What we learned

In 2020 we built 3 iterations of our generator, simply because getting the output right was hard. We were building a generator and learning how to do serverless at the same time.

So our confidence shifted from "should be easy" to "Errr", and we now stand at "not easy, but very much possible". We were shifting from traditional applications hosted on e.g. Tomcat or IBM to a cloud-native serverless model, and here are some highlights of what we learned.

Events are a first-class citizen

One of the things we learned about serverless is that you have to embrace an event-driven architecture to harness its full power. One of the reasons to go serverless is cost reduction, and this means "not paying for idle". A function that waits for a synchronous response is billed while it waits, so you have to shift from traditional synchronous calls to asynchronous events to minimize waits.

Embrace Infra as Code

To minimize the wait states in your functions containing business logic, you have to treat them as in/out processors and shift orchestration to your infrastructure. The downside to this approach is that the complexity of your infra will increase significantly. In our experience, you can't treat infrastructure and application as 2 separate things. As Gregor Hohpe says, don't send a human to do a machine's job.
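As a rough illustration of an "in/out processor", consider the sketch below. It follows the `(event, context)` signature of a Python Lambda handler, but the event shape and the function name are assumptions made up for this example.

```python
def price_order(event: dict, context: object = None) -> dict:
    """Take an order event in, return the priced order out.

    The function calls no other service and waits for nothing. What
    happens with the result (store it, notify someone, start the next
    step) is wired up in the infrastructure, not in this code.
    """
    total_cents = sum(
        line["quantity"] * line["unit_price_cents"] for line in event["lines"]
    )
    return {
        "order_id": event["order_id"],
        "total_cents": total_cents,
        "status": "PRICED",
    }


if __name__ == "__main__":
    # Hypothetical input event.
    order = {
        "order_id": "o-1",
        "lines": [
            {"quantity": 2, "unit_price_cents": 250},
            {"quantity": 1, "unit_price_cents": 100},
        ],
    }
    print(price_order(order))
```

The function stays trivial to test, and that is exactly the trade: the routing that used to live in code now lives in infrastructure definitions, which therefore deserve the same care as the application.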

Pre-render queryable data

With serverless, your "machines" are not always ready to respond. This means cold starts, and therefore pre-rendering your queryable data may be a good idea. To guarantee a good user experience, we make sure that our typical "GET" operations have no dependency on Function as a Service. This is not black and white, but I think it is a good rule of thumb.

The pattern we use to decouple truth from projection is "command query responsibility segregation" (CQRS). You could use other patterns, but this one fits our mental model.

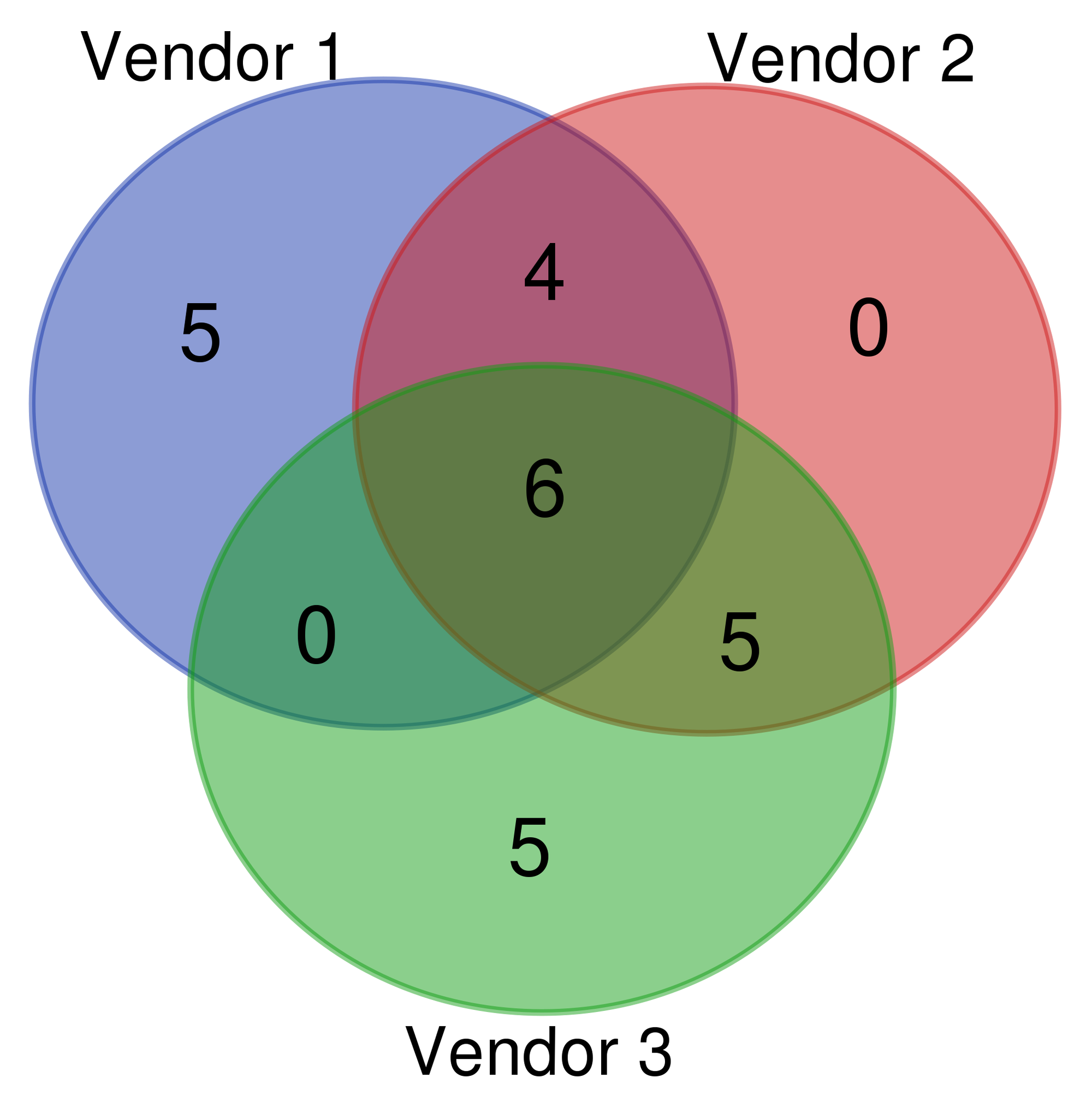
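A toy, in-memory sketch of that split could look like this. The two stores, the event shape, and the key layout are all hypothetical. In a real setup the projection would be triggered asynchronously by the event and written to a store that can serve reads without a function in between; here it is called directly to keep the example short.

```python
import json

event_log = []   # the "truth": an append-only list of events
read_store = {}  # the "projection": pre-rendered JSON documents by key


def project(event: dict) -> None:
    """Update the pre-rendered document that GET requests will read."""
    key = f"customers/{event['customer_id']}/orders.json"
    document = json.loads(
        read_store.get(key, '{"orders": [], "total_cents": 0}')
    )
    document["orders"].append(event["order_id"])
    document["total_cents"] += event["amount_cents"]
    read_store[key] = json.dumps(document, sort_keys=True)


def handle_command(command: dict) -> None:
    """Command side: record what happened, then refresh the projection."""
    event = {
        "type": "OrderPlaced",
        "customer_id": command["customer_id"],
        "order_id": command["order_id"],
        "amount_cents": command["amount_cents"],
    }
    event_log.append(event)
    project(event)


def get(key: str) -> str:
    """Query side: a plain lookup, no business logic at read time."""
    return read_store[key]


if __name__ == "__main__":
    handle_command({"customer_id": "c-1", "order_id": "o-1", "amount_cents": 500})
    handle_command({"customer_id": "c-1", "order_id": "o-2", "amount_cents": 1000})
    print(get("customers/c-1/orders.json"))
```

All the work happens at write time, so a read is nothing more than fetching a document that is already rendered.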

Lock-in

Don't be afraid of "Vendor Lock-In", because the truth is that coupling is not the same as lock-in. We do use a lot of managed services from AWS, and we even use proprietary languages like CloudFormation. But everything we build is transferable to another cloud or even on-prem; it just means work. The question is, is this a bad thing? I like the analogy "Architecture is selling options". By building a layer of abstraction on top of AWS I could make my solutions cloud-agnostic; this is an option that you buy. And the cost of doing so is very high: we would pay in development time and, even worse, in underutilizing the possibilities that the cloud brings us, because to make the abstraction layer truly cloud-agnostic we could only use the common denominator of all vendors.

In conclusion, this is a very expensive option to buy just to minimize vendor coupling, and it is an option we are very unlikely ever to use. Why is it unlikely? Well, the code is not the only thing that is coupled; knowledge and experience are coupled too. You could abstract as much as you want, but when you do decide to change vendors you have to update your skill set, and this is probably a bigger effort than migrating the code in the first place, because it is about people.

Being cloud-native is about the economies of speed, and we are just not prepared to give up speed in the form of underutilization to avoid lock-in.

Coupling is not the same as lock-in. A competitor that can iterate faster while you are avoiding lock-in can lock you out long before lock-in becomes an issue.

Closing note

To answer the question "Where to start?": well, we started by learning how to do serverless in the first place, by trial and error, and admittedly sometimes more error than trial. It took us 3 iterations to get the basics right; iteration number 4 will focus on getting something shareable with the world. Thanks for reading.

The illiterate of the 21st century will not be those who cannot read and write, but those who cannot learn, unlearn, and relearn. - Alvin Toffler