Modeling in Tracepaper: Testing Aggregate Behavior

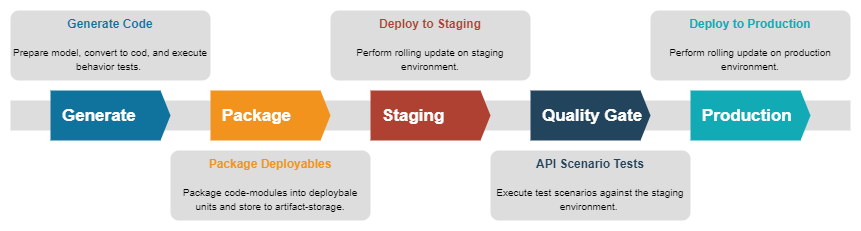

In the previous posts, we modeled domain behavior and created a trigger that starts this behavior via a command. We continue the Modeling in Tracepaper series with a focus on quality. Some of what we describe is already available in Tracepaper; we will also present some plans for the future. The concept overview visualizes the scope of this post.

Modeling is all fun and games until you break production. We need tests to prevent breaking changes from slipping in. As an architect, I care about proper test coverage. As an engineer, I don't find writing tests energizing.

Behavior Flows

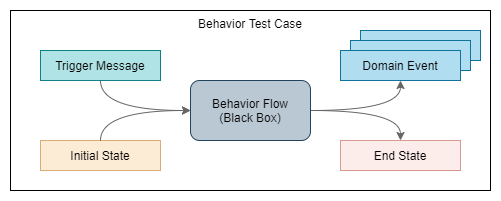

If we look at our behavior flows from a testing perspective, they are fairly simple.

A message triggers the behavior, which may interact with the internal state for validations or extrapolations, and which may result in domain events and state changes.

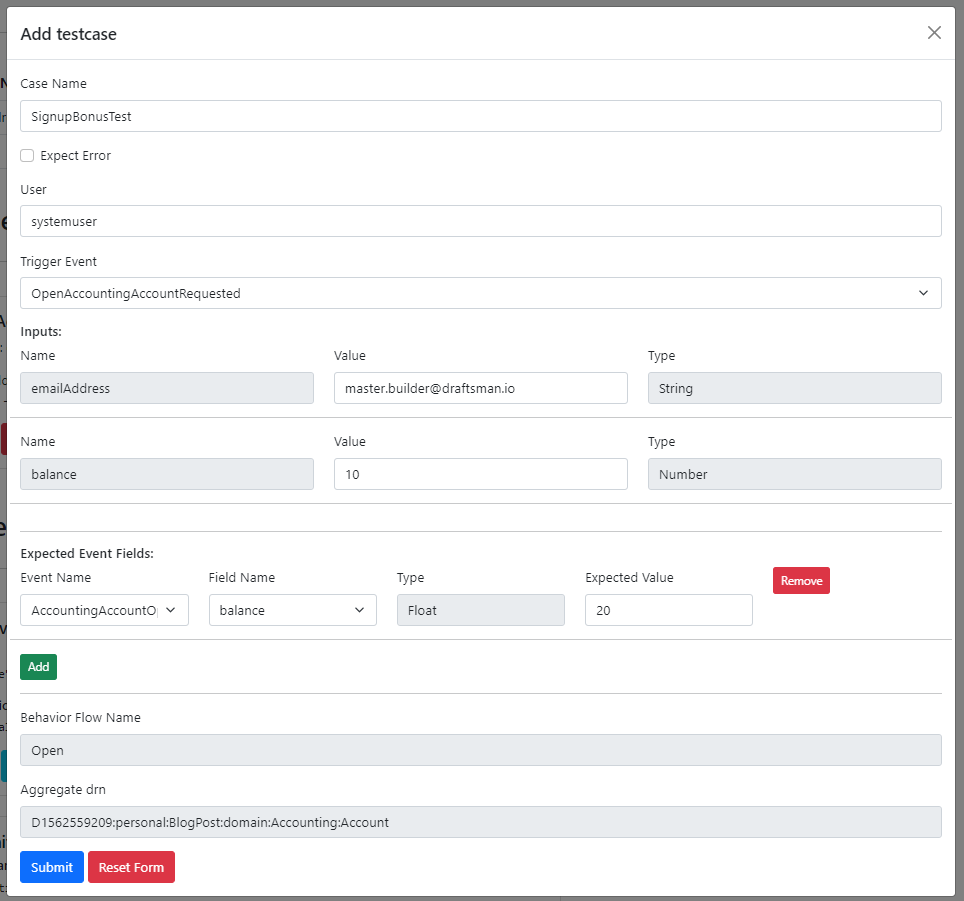

That is also how we model our tests: the flow is treated as a black box. We capture the intention of a test case by declaring the state and the trigger, and asserting that the expected messages are emitted. Optionally, we check whether the end state meets expectations. Every behavior flow must have at least one test case; the engine enforces this by rejecting a flow without test cases.
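In Tracepaper these test cases are modeled declaratively, not written as code. Purely to illustrate the given/when/then shape, here is a minimal Python sketch. It assumes a flow can be called as a plain function that takes the current state and a trigger and returns the emitted events plus the new state; `DocumentState`, `rename_flow`, `FlowTestCase`, and `run_case` are hypothetical names and do not reflect Tracepaper's generated code.

```python
from __future__ import annotations

from dataclasses import dataclass, field, replace
from typing import Callable, Optional


# Hypothetical aggregate state and event types (illustration only).
@dataclass(frozen=True)
class DocumentState:
    name: str = ""


@dataclass(frozen=True)
class Event:
    event_type: str
    payload: dict = field(default_factory=dict)


class ValidationError(Exception):
    """Raised when a trigger fails a modeled validation."""


def rename_flow(state: DocumentState, trigger: dict) -> tuple[list[Event], DocumentState]:
    """Hypothetical behavior flow: validate against state, emit events, update state."""
    new_name = trigger.get("name", "").strip()
    if not new_name:
        raise ValidationError("name must not be empty")
    if new_name == state.name:
        return [], state  # no change, so no domain event
    event = Event("DocumentRenamed", {"old": state.name, "new": new_name})
    return [event], replace(state, name=new_name)


@dataclass
class FlowTestCase:
    given_state: DocumentState   # state before the trigger
    when_trigger: dict           # the trigger message
    then_events: list[str]       # expected emitted event types, in order
    then_state: Optional[DocumentState] = None  # optional end-state check


def run_case(flow: Callable, case: FlowTestCase) -> None:
    """Treat the flow as a black box: only inputs and outputs are inspected."""
    events, end_state = flow(case.given_state, case.when_trigger)
    emitted = [e.event_type for e in events]
    assert emitted == case.then_events, f"expected {case.then_events}, got {emitted}"
    if case.then_state is not None:
        assert end_state == case.then_state, f"unexpected end state {end_state}"


# Example test case: renaming a draft emits DocumentRenamed and updates the name.
run_case(rename_flow, FlowTestCase(
    given_state=DocumentState(name="draft"),
    when_trigger={"name": "final"},
    then_events=["DocumentRenamed"],
    then_state=DocumentState(name="final"),
))
```

Because `run_case` only looks at what goes in and what comes out, the flow's internals can change freely without touching the test, which is the point of the black-box approach.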

How many test cases you need depends on the complexity of the flow. In Tracepaper, for example, we have 154 flows and 216 test cases. Most flows have just one test case because their complexity is low; some have up to five.

We have not yet figured out how to calculate test coverage properly. We could use simple code-line coverage, but that feels lazy. It would be more valuable to base coverage on the model:

- Did we use all defined triggers?
- Did we see all defined events emitted?
- Did we see all modeled validation errors?

We know what we want, but we have not yet taken the time to implement it. It is somewhere on our backlog.
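A minimal sketch of what model-based coverage could look like, assuming the model can be exported as sets of trigger, event, and validation-error names, and that test runs record which of those they exercised. `ModelElements`, `Observed`, and `model_coverage` are hypothetical names; this is not an existing Tracepaper feature.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class ModelElements:
    """Hypothetical export of what the model defines."""
    triggers: set[str]
    events: set[str]
    validation_errors: set[str]


@dataclass
class Observed:
    """Hypothetical record of what the test runs actually exercised."""
    triggers: set[str] = field(default_factory=set)
    events: set[str] = field(default_factory=set)
    validation_errors: set[str] = field(default_factory=set)


def model_coverage(model: ModelElements, seen: Observed) -> dict[str, tuple[float, set[str]]]:
    """Per category: (fraction of defined elements exercised, elements never exercised)."""
    report: dict[str, tuple[float, set[str]]] = {}
    for kind in ("triggers", "events", "validation_errors"):
        defined: set[str] = getattr(model, kind)
        hit = getattr(seen, kind) & defined  # ignore names the model does not define
        ratio = len(hit) / len(defined) if defined else 1.0  # nothing defined counts as covered
        report[kind] = (ratio, defined - hit)
    return report


model = ModelElements(
    triggers={"CreateDocument", "RenameDocument"},
    events={"DocumentCreated", "DocumentRenamed"},
    validation_errors={"EmptyName"},
)
seen = Observed(triggers={"RenameDocument"}, events={"DocumentRenamed"})
for kind, (ratio, missing) in model_coverage(model, seen).items():
    print(f"{kind}: {ratio:.0%} covered, missing {sorted(missing)}")
```

Reporting the missing elements, not just a percentage, is what makes this more useful than line coverage: it tells you which trigger, event, or validation error still lacks a test case.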

Notifiers

Compared to behavior flows, notifiers are harder to test. I have not explained notifiers yet; we will take a look at them next week, so for now you will have to take my word for it. Notifiers interact with (external) APIs, so testing them means we have to figure out how to mock those APIs.
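Tracepaper does not support notifier tests yet, but a unit-level test of this kind would typically replace the external API with a test double. A minimal sketch using Python's standard `unittest.mock`, assuming a hypothetical notifier `notify_renamed` that receives its HTTP client as a parameter; the URL is a placeholder.

```python
from unittest.mock import Mock

WEBHOOK_URL = "https://example.com/hooks/rename"  # placeholder, not a real endpoint


def notify_renamed(event: dict, http_post) -> None:
    """Hypothetical notifier: forwards DocumentRenamed events to an external API.

    The HTTP client is passed in, so a test can substitute a mock for it.
    """
    if event.get("event_type") != "DocumentRenamed":
        return  # only react to the event this notifier subscribes to
    http_post(WEBHOOK_URL, json={"old": event["old"], "new": event["new"]})


def test_notifier_calls_external_api() -> None:
    fake_post = Mock()  # stands in for something like requests.post
    notify_renamed({"event_type": "DocumentRenamed", "old": "draft", "new": "final"}, fake_post)
    fake_post.assert_called_once_with(WEBHOOK_URL, json={"old": "draft", "new": "final"})


def test_notifier_ignores_other_events() -> None:
    fake_post = Mock()
    notify_renamed({"event_type": "DocumentCreated"}, fake_post)
    fake_post.assert_not_called()


test_notifier_calls_external_api()
test_notifier_ignores_other_events()
```

Injecting the client is what makes the mock straightforward; a notifier that constructs its own client would need `unittest.mock.patch` instead. Either way, a mock only verifies the call we expect, not that the real external API accepts it.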

We don't support tests for notifiers yet, but we use them a lot in Tracepaper (19 of them), and they do important work. Put bluntly, if we break the modeled notifiers, Tracepaper is effectively worthless. How do we deal with this? We use a more expensive test method: end-to-end testing.

Test Scenarios

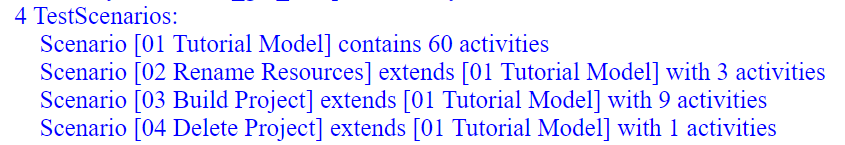

We have a concept called test scenarios: essentially, a scenario is a sequence of calls against the generated API. Because that API is generated from the modeling concepts, we don't think about the API when modeling a scenario; we think in domain concepts: commands, behavior, notifiers, and views. Behind the scenes, scenarios lean heavily on track and trace.
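The idea of a scenario as domain-level steps that a runner translates into API calls can be sketched in Python. Everything here is an assumption for illustration: the step types `SendCommand` and `ExpectView`, and the `ScenarioClient` protocol with `send_command`, `wait_until_processed` (standing in for track and trace), and `query_view`. It is not Tracepaper's scenario format or API.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Protocol, Union


@dataclass
class SendCommand:
    """Scenario step: send a domain command (hypothetical step type)."""
    command: str
    payload: dict


@dataclass
class ExpectView:
    """Scenario step: check fields of a view record (hypothetical step type)."""
    view: str
    key: str
    expected: dict


Step = Union[SendCommand, ExpectView]


class ScenarioClient(Protocol):
    """Hypothetical client for the generated API of a deployed application."""

    def send_command(self, command: str, payload: dict) -> str: ...  # returns a trace ID
    def wait_until_processed(self, trace_id: str) -> None: ...
    def query_view(self, view: str, key: str) -> dict: ...


def run_scenario(client: ScenarioClient, steps: list[Step]) -> None:
    """Translate domain-level steps into API calls, in order."""
    for number, step in enumerate(steps, start=1):
        if isinstance(step, SendCommand):
            trace_id = client.send_command(step.command, step.payload)
            # Wait (via track and trace) until the behavior and notifiers
            # triggered by this command have finished before the next step.
            client.wait_until_processed(trace_id)
        elif isinstance(step, ExpectView):
            actual = client.query_view(step.view, step.key)
            for name, value in step.expected.items():
                if actual.get(name) != value:
                    raise AssertionError(
                        f"step {number}: {step.view}[{step.key}].{name} is "
                        f"{actual.get(name)!r}, expected {value!r}"
                    )
        else:
            raise TypeError(f"step {number}: unknown step type {type(step).__name__}")
```

Waiting on the trace ID after each command matters in an event-driven system: querying a view right after sending a command could otherwise observe state that has not been updated yet.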

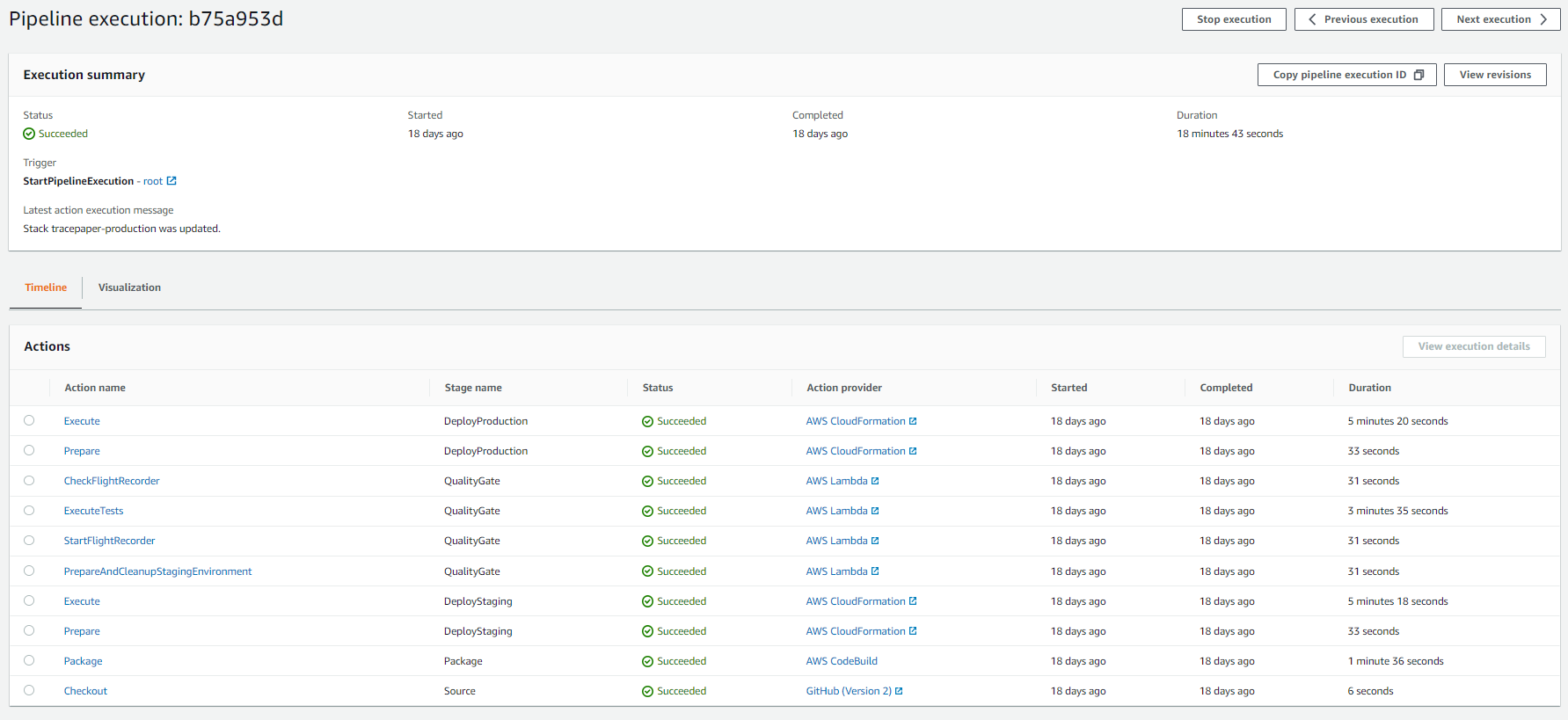

A scenario must be executed against a deployed version of the application, so running test scenarios is a responsibility of the pipeline.

We are currently alpha testing this feature. Once we are happy with it, we will add scenario modeling to Tracepaper and make the extended pipeline available for general use.

That is a lot of plans, but what can you do in Tracepaper right now?

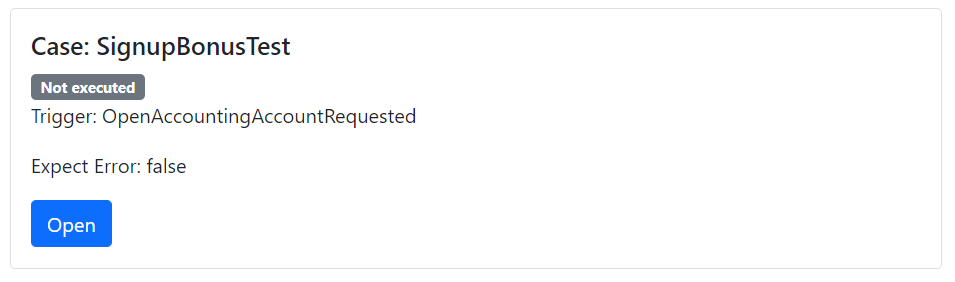

Truth be told, it is fairly limited: you can send in a trigger message and assert that the expected domain events are emitted. It is very basic, but good enough for our beta.

Here is a filter on our backlog so you know what to expect in the coming months.

Thank you for reading!