Over the Hill

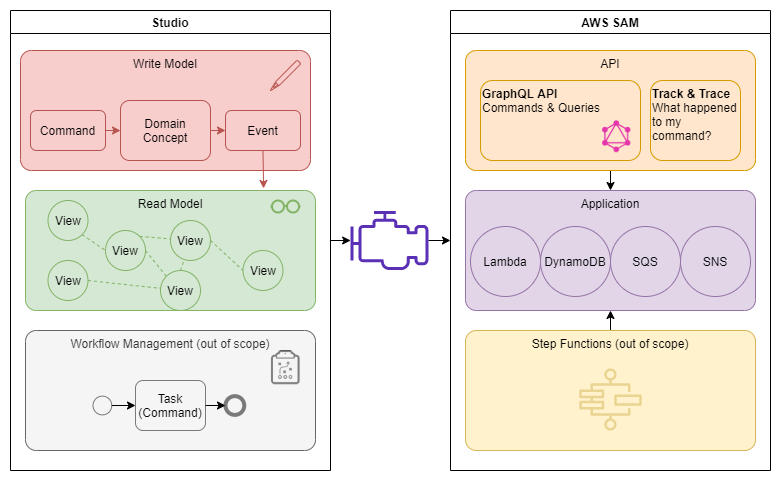

When we chose a cloud provider we also created a High-Level Design and used it to formulate a "shopping list" for vendor selection.

It is time to add more fidelity to this design, because in its current state it is a very crude wish: "I want to model something and transform it into a cloud-deployable application."

To clarify, in this post we call the left-hand side "Model" and the right-hand side "Application". Now our wish becomes more tangible: as software engineers, we can start designing and building software. And we did just that one year ago, to get our hands dirty and learn about serverless. While building three iterations, our strategy emerged.

We build opinionated software: our modeling studio will guide you in the direction we think is best for modeling your software. Yes, this means our studio will be limited compared to other low-code environments. But we see this as a liberating constraint.

Freedom is not the absence of limitations and constraints but it is finding the right ones, those that fit our nature and liberate us - Timothy Keller

We learn to walk before we run. It sounds logical, but how often do we start building something as if it should "do" everything right from day one, without asking whether it should? A feature like workflow management is very powerful and complex. If we are honest, do we need it on day one? No! We only experimented to prove we could do it, and then we shelved it.

We prefer managed services over customization, to achieve momentum and quality assurance. Our key constraint is being a two-developer team. Sure, we could build everything from scratch. Hell, I once built an AppEngine just as a mental exercise (please don't break my server). But building something that works, and building something that works and is also maintainable, robust, and scalable? Yeah, two different things.

Our road to model interpretation

What changed in these 3 iterations? Our wish did not change, so it is all about implementation details.

Event bus

As an event-driven application, we rely heavily on event buses. During conception, we started by using Simple Queue Service (SQS) for all our eventing. The dilemma with SQS in combination with Lambda is that queue subscriptions work by polling. This means there are calls to SQS even when there is no traffic to your application. Those calls are not free, and one of the things we aim for is minimizing idle cost. As the application matures and the number of event handlers grows, that cost goes up.

To mitigate this, we moved our event bus implementation from SQS to SNS (Simple Notification Service, think topics). This solved our predicament only partially, because not every subscriber is interested in every event. And a rule of thumb for minimizing cost in serverless designs is: don't invoke a function if you are not interested in its result.

That is why we changed our event bus implementation yet again, this time to EventBridge. With EventBridge, we can do content-based filtering at the infrastructure level, among other things. It does shift complexity from our application logic to our infrastructure, but with serverless, you can't separate code from infra anyway.
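To make the idea of content-based filtering concrete, here is a minimal Python sketch. It is not the EventBridge API. It only mimics the simplest kind of pattern matching (a list means "any of these values", a nested object means "look inside") to show why handlers that are not interested in an event never get invoked. The event shape, the handler names, and the `matches` and `dispatch` helpers are all made up for illustration.

```python
def matches(pattern: dict, event: dict) -> bool:
    """Return True if every field in the pattern matches the event.

    Simplified rule set: a list means "any of these values", a dict means
    "recurse into the nested object". Real EventBridge patterns support
    more operators, which are deliberately left out of this sketch.
    """
    for key, expected in pattern.items():
        if key not in event:
            return False
        actual = event[key]
        if isinstance(expected, dict):
            if not isinstance(actual, dict) or not matches(expected, actual):
                return False
        elif actual not in expected:
            return False
    return True


def dispatch(event: dict, rules: dict) -> list:
    """Return the names of the handlers whose rule matches the event."""
    return [name for name, pattern in rules.items() if matches(pattern, event)]


# Hypothetical rules: each handler declares which events it cares about.
RULES = {
    "send_welcome_mail": {"detail-type": ["UserRegistered"]},
    "update_order_view": {
        "detail-type": ["OrderPlaced", "OrderCancelled"],
        "detail": {"channel": ["web"]},
    },
}

# Hypothetical event, loosely shaped like an event bus envelope.
event = {
    "source": "example.shop",
    "detail-type": "OrderPlaced",
    "detail": {"channel": "web", "orderId": "42"},
}

# Only the handlers returned here would be invoked (and billed).
print(dispatch(event, RULES))
```

With plain topics, both handlers would be invoked and one of them would immediately throw the event away. With filtering in front of the handlers, that wasted invocation never happens.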

GraphQL API

We made a choice fairly early on to use a GraphQL API as the gateway to our application. In a future post I will elaborate on why. The one-line summary: "we think it has a good fit with our ideas about modeling".

Developers love frameworks, most of us anyway, and in the early days of our conception efforts, we used the Apollo framework. This framework plays nicely with Lambda and Node.js. It has some learning curve, but I have no bad word to say about it. At some point, we switched programming languages, so we also selected another framework. We landed on Graphene, and most of the experience gained with Apollo was transferable.

But we switched again, and this had nothing to do with missing features, bugs, or anything like that. Both are valid choices for building GraphQL interfaces and I would use them again... when I need to build a traditional server application. The reason we migrated away from "doing it in code" has to do with cold starts. The GraphQL gateway is the single point in our application that has a direct user experience bound to it. Speedy responses are therefore a key concern for this component, and the fastest response I got in my limited setup was 150 to 200 ms. When I hit a cold start, the response time degraded to a full 3 seconds! There are mechanisms to minimize cold starts, for example provisioned concurrency. But this comes with a price tag, and it is no guarantee, because as a startup we anticipate infrequent loads.
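A bit of back-of-the-envelope arithmetic shows why infrequent traffic makes this hurt. The sketch below takes the numbers from my limited setup (roughly 175 ms warm, taken as the midpoint of 150 to 200 ms, and 3 seconds cold) and computes the average response time for a few cold-start fractions. Those fractions are assumptions picked for illustration, not measurements, and `expected_latency_ms` is just a helper name I made up.

```python
def expected_latency_ms(cold_fraction: float, warm_ms: float, cold_ms: float) -> float:
    """Average response time when a given fraction of requests hits a cold start."""
    if not 0.0 <= cold_fraction <= 1.0:
        raise ValueError("cold_fraction must be between 0 and 1")
    return cold_fraction * cold_ms + (1.0 - cold_fraction) * warm_ms


WARM_MS = 175.0   # midpoint of the 150 to 200 ms observed when warm
COLD_MS = 3000.0  # the 3 seconds observed on a cold start

# Assumed cold-start fractions; with infrequent traffic the share can be high.
for fraction in (0.01, 0.10, 0.50):
    average = expected_latency_ms(fraction, WARM_MS, COLD_MS)
    print(f"{fraction:.0%} cold starts: about {average:.0f} ms on average")
```

The average hides the real problem, though: the unlucky user who triggers the cold start still waits the full 3 seconds.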

We migrated our GraphQL gateway to AWS AppSync, which is essentially a managed GraphQL service. Why didn't we use this from the start? Well, in my humble opinion, the learning curve is quite steep. And the truth is, one year ago we did not have enough knowledge about CloudFormation, GraphQL, and the AWS environment in general to comprehend how to configure AppSync. But now we do.

Draftsman Modeling Language

In our first iterations, we used JSON in combination with JSON schemas as our Draftsman Modeling Language (DML). We didn't put much effort into asking why; it just felt right... until it didn't anymore. Once the models became bigger, they became unreadable. And although the DML is not meant to be read, it just doesn't feel right to stick with this decision. Therefore, for iteration 4 of the generator, we will flesh out an XML version of our Draftsman Modeling Language.

Python all the way

We switched programming languages, but I think I have written enough about this topic.

Where we stand now

I like the idea that "work is like a hill". You have the uphill part, and during this phase we talk in uncertainties. We ask questions: "Could we do this? How would it work? Is it possible to do ...?" Once we get over the hill, we start speaking in actions: "we implement this and that, and we integrate X and Y". At the moment, we have just peeked over the top of the hill. We know what we are aiming for and we have an idea of how to get there. It is no longer a matter of "could we do it?" but more of "how much time and effort are we willing to spend on this?" Which features are necessary for our MVP, and which features get left behind?

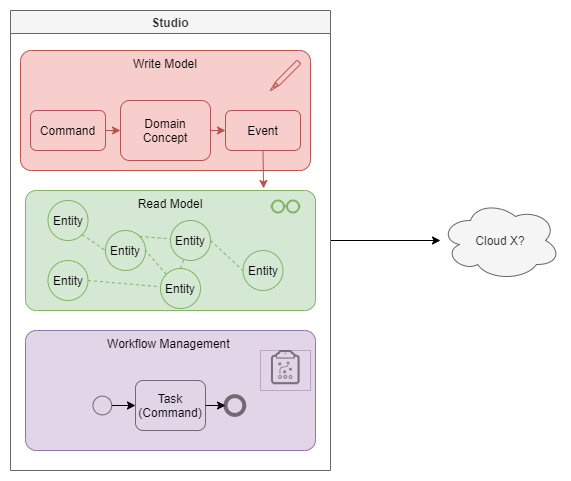

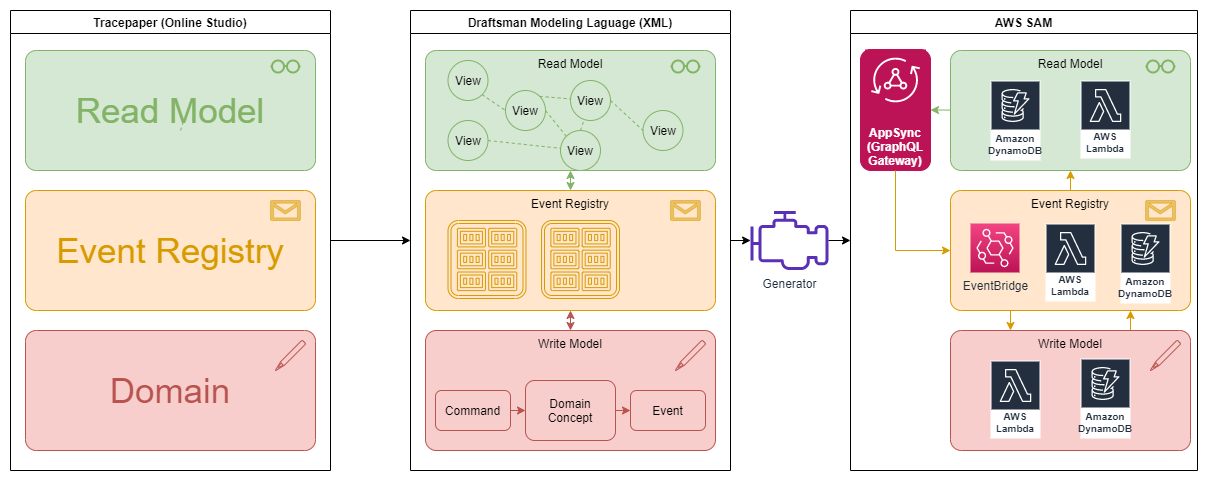

Updated HLD

So here is our latest High-Level Design for our Minimum Viable Product. As you can see, we made Events a first-class citizen in this design. We also made a very clear distinction between reading and writing in our application, optimizing the command part for consistency and the query part for performance.
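For readers who have not seen this split before, here is a tiny in-memory Python sketch of the idea. It is not our implementation, which is built on managed services. The project domain and all names in it (`Event`, `EventStore`, `ProjectView`, `rename_project`) are hypothetical, and everything runs synchronously in one process to keep it short.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Event:
    """An immutable fact about something that happened."""
    aggregate_id: str
    name: str
    payload: dict


@dataclass
class EventStore:
    """Append-only log: the source of truth for the write side."""
    events: list = field(default_factory=list)
    subscribers: list = field(default_factory=list)

    def append(self, event: Event) -> None:
        self.events.append(event)
        for notify in self.subscribers:
            notify(event)  # push the event to every read model


class ProjectView:
    """Read model: a denormalized dict that is cheap to query."""

    def __init__(self) -> None:
        self.names = {}

    def apply(self, event: Event) -> None:
        if event.name in ("ProjectCreated", "ProjectRenamed"):
            self.names[event.aggregate_id] = event.payload["name"]

    def get_name(self, project_id: str):
        return self.names.get(project_id)


def rename_project(store: EventStore, project_id: str, new_name: str) -> None:
    """Command handler: check the event history first, then emit an event."""
    exists = any(
        e.aggregate_id == project_id and e.name == "ProjectCreated"
        for e in store.events
    )
    if not exists:
        raise ValueError(f"unknown project: {project_id}")
    store.append(Event(project_id, "ProjectRenamed", {"name": new_name}))


store = EventStore()
view = ProjectView()
store.subscribers.append(view.apply)

store.append(Event("p1", "ProjectCreated", {"name": "Draft"}))
rename_project(store, "p1", "Tracepaper")
print(view.get_name("p1"))
```

The command side only ever validates and appends events, which is where consistency is guarded. The query side never validates anything; it just answers from a view that was shaped in advance for the question being asked.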

Direction

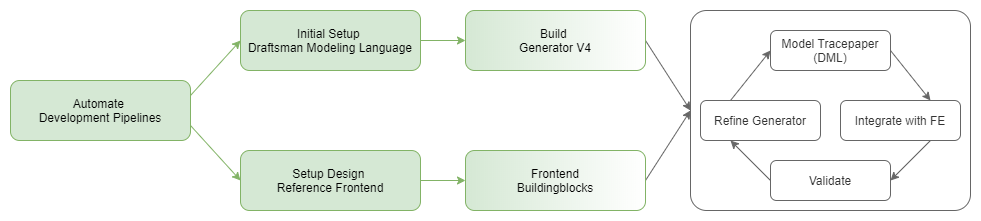

I find "roadmap" too heavy a word, because we make it up as we go. But we do have a direction we are following.

With the phrase "eat your own dog food" in mind, we intend to model the back-end for Tracepaper (our modeling studio) in the Draftsman Modeling Language. This enables us to validate our concepts before we even have to build the visual layer. Cool, right?